How One App Used A/B Testing to Improve Its Key Metrics

As video calls become the new normal, chances are you’ve heard of Houseparty. The face-to-face social networking app for mobile and desktop is currently one of the top three social networking apps in the App Store and one of the best ways for us to stay connected with our loved ones.

Read on to learn about Houseparty’s mobile A/B testing process and experimentation best practices, plus tips from Houseparty’s Jeff for creating a data-informed culture at your company.

Houseparty’s Mobile A/B Testing Methodology

The Houseparty team runs weekly experiments on their mobile and desktop apps. They collaborate with engineering and marketing to decide what to test, from simple copy and visual changes to switching up entire user flows with 16+ variations.

Houseparty prefers to introduce small changes to their app rather than large ones. Big changes can cause unpredictable fluctuations in key metrics, and their direct (and indirect) impact on engagement is harder to understand than that of smaller tweaks rolled out over time.

Houseparty uses Taplytics to quickly bucket users into segments for their experimentation program. Experimentation helps the team understand which changes affect usage across the app and validate product decisions quickly. Previously, the team relied on complex calculation models that weren’t directly tied to usage data to determine whether updates were having a positive impact.
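Taplytics’ internal bucketing isn’t described here. As a rough illustration, one common approach is to hash a stable user ID together with the experiment name, so each user always lands in the same variant. The sketch below assumes that approach; the `assign_variant` helper and the experiment and variant names are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one variant of an experiment (hypothetical helper).

    Hashing the experiment name together with the user ID keeps each user in
    the same variant for the life of the experiment, while assignments in
    different experiments stay independent of one another.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Treat the first 8 bytes of the hash as an unsigned integer and map it to a variant.
    bucket = int.from_bytes(digest[:8], "big")
    return variants[bucket % len(variants)]

# Hypothetical experiment comparing the original onboarding flow with a checkbox list.
variants = ["control", "checkbox_list"]
print(assign_variant("user-123", "onboarding_permissions", variants))
```

Because assignment is a pure function of the IDs, no per-user state has to be stored to keep a user in the same variant across sessions.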

“We would make changes, and see some metrics go up, and some go down. But we wouldn’t be able to pinpoint a fix because we didn’t have the specific metrics on each element,” says Jeff. “Now, when we make changes in our product, we can clearly understand the impact on the entire user experience. We know the upsides and the downsides of every change.”

Houseparty’s Top Mobile A/B Testing Use Cases

1. Growing Opt-in Rates Through Better Mobile Onboarding Messaging

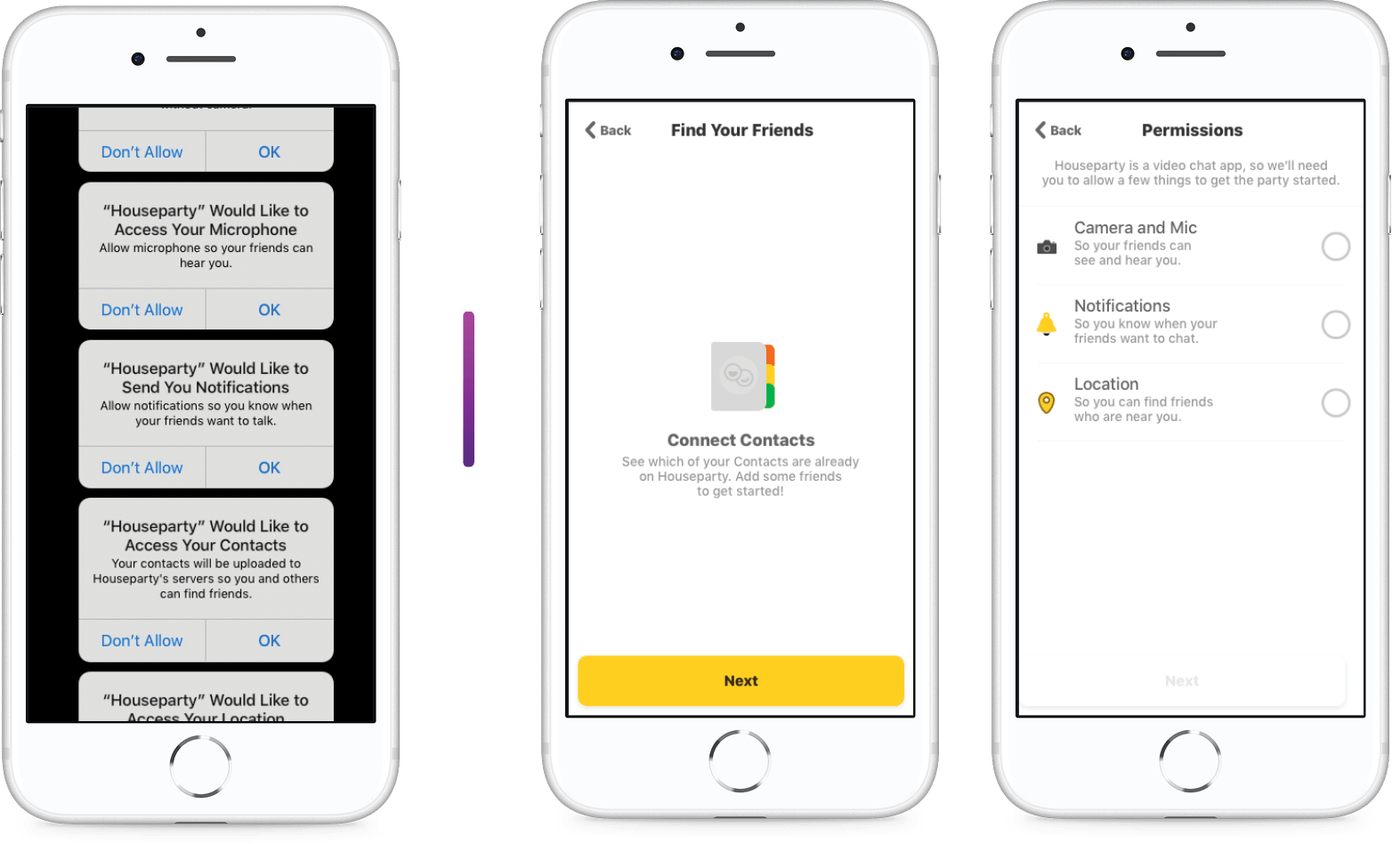

Houseparty has gone through multiple iterations of their onboarding funnel to increase opt-ins for push notification permissions, number of friends added, and other behaviors that lead to long-term usage and higher engagement rates.

They tested adding messaging before the app requested access to users’ cameras, mics, contact list, and location. They hoped that if users understood the benefits of opting-in before they were presented with the permission request, they would be more likely to grant access instead of automatically declining (which they often did out of habit).

Next, they tested a checkbox list for opting into camera, mic, push notification, and location access, to improve the user experience and make onboarding feel shorter.

Some permissions worked better as checkboxes and made onboarding feel shorter.

This change increased push notification opt-in rates by 9% and contact list access by 15%.

“We ended up with a net benefit of improved retention and improved activation,” says Jeff of their onboarding flow experiments.

2. Driving Mobile User Connections—and Retention—with Targeted Testing

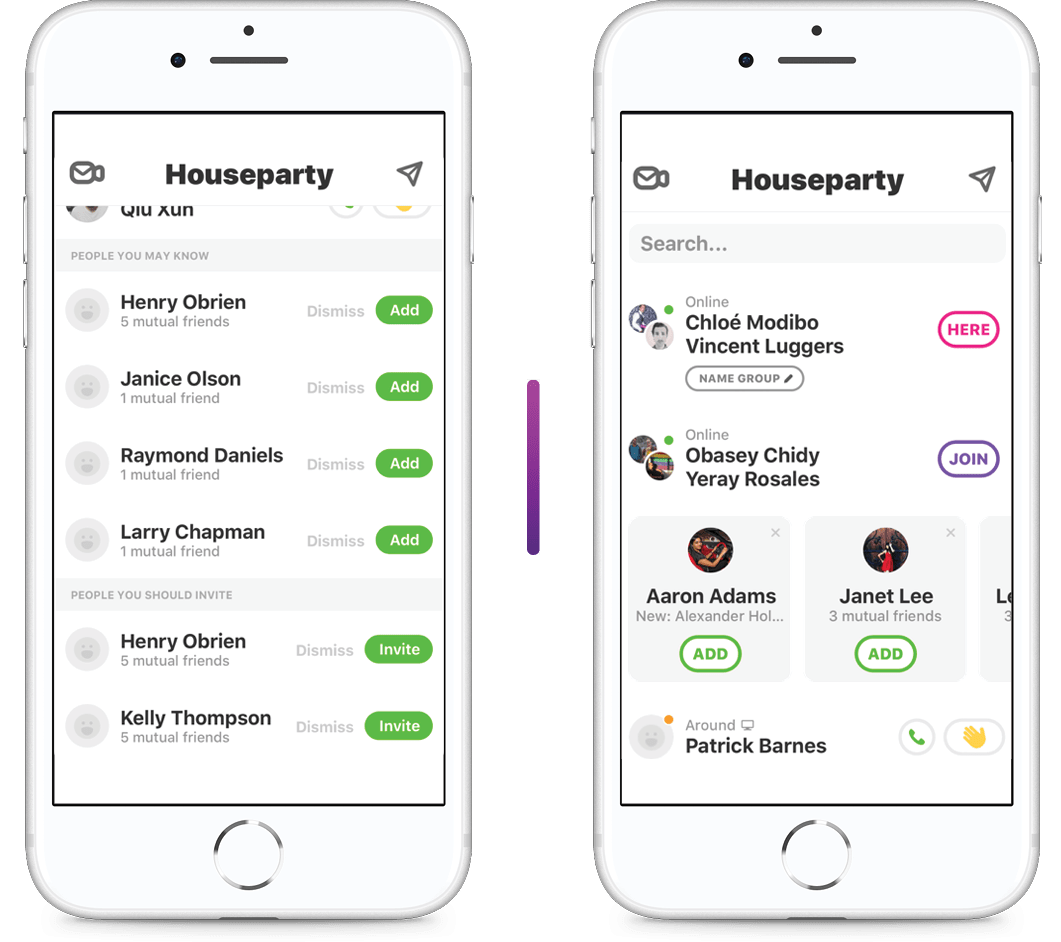

Houseparty analyzes experiment data to see how changes affect specific groups of users, segmented by new vs. existing users, country, or device. “We’ll also do tests where we restrict people based on only new users if we don’t want to compare them to existing users,” says Jeff.

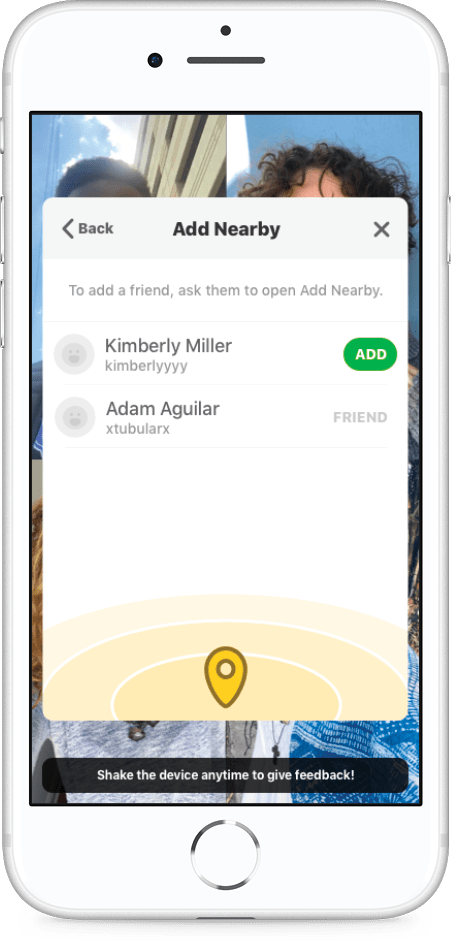

For example, they’ve tested how they surface friend suggestions, and changed the timing and messaging of those requests based on how long someone has been using the app. They’ve also experimented with sending tutorials to users in their first few weeks if they aren’t using a certain feature, like adding contacts or connecting the app to their other social media accounts.

Through testing the way they’ve introduced the “People You May Know” feature, Houseparty has doubled the number of friend requests new users send on their first day in the app.
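The tutorial rule described in this section, targeting users in their first few weeks who haven’t used a feature yet, can be expressed as a simple targeting check. This is a sketch under assumptions: the 21-day window standing in for “first few weeks,” the `has_added_contacts` flag, and the function name are all hypothetical.

```python
from datetime import date

def should_send_tutorial(signup_date: date, today: date, has_added_contacts: bool,
                         window_days: int = 21) -> bool:
    """Return True for users still in their first weeks who haven't used the feature yet.

    window_days=21 is a hypothetical stand-in for "first few weeks".
    """
    days_in_app = (today - signup_date).days
    return days_in_app < window_days and not has_added_contacts

print(should_send_tutorial(date(2020, 4, 1), date(2020, 4, 10), has_added_contacts=False))
```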

3. Testing Timing & Staggered Feature Rollouts

The Houseparty team uses Taplytics’ targeting capabilities to release significant updates to specific markets before launching to their entire user base. This way, they can see how users interact with new features before releasing them to everyone.
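A market-by-market rollout can be modeled as a gate that first checks the user’s country and then admits a stable percentage of users within those markets. This is a hedged sketch, not Taplytics’ API: the config shape, feature name, country codes, and percentage are all hypothetical.

```python
import hashlib

# Hypothetical rollout config: the feature name, markets, and percentage are illustrative.
ROLLOUT = {"feature": "new_invite_flow", "countries": {"CA", "NZ"}, "percent": 50}

def in_rollout(user_id: str, country: str, rollout: dict) -> bool:
    """Return True if this user should see the feature under the staged rollout."""
    # Users outside the launch markets never see the feature at this stage.
    if country not in rollout["countries"]:
        return False
    # Within launch markets, hash the user into a stable bucket from 0 to 99.
    key = f"{rollout['feature']}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return bucket < rollout["percent"]

print(in_rollout("user-123", "CA", ROLLOUT))
```

Widening the rollout then means adding countries to the set or raising `percent`. Because each user’s bucket is stable, users who already have the feature keep it as the percentage grows.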

They also test how, when, and where they introduce new features. “We’ll test something as small as ‘should this notification pop up after three seconds or five seconds or 10 seconds?’” says Jeff.

“Before Taplytics, when we rolled out new features without testing them, we weren’t sure if changes in engagement levels were because of those updates, or because of something else, since our testing was built on time-based comparisons,” says Jeff. “Taplytics helps us make decisions like what product features to keep or kill. We couldn’t move as quickly as we do without it.”

How to Create an Experimentation Culture

Here’s Jeff’s advice for building a mobile A/B testing process at your company.

1. Assemble your A/B testing team and get buy-in early on.

Experiment ideas can come from any part of the business. Engineering, product management and marketing are heavily involved in coming up with experiments and contributing variations for tests. “Everyone’s helping make product decisions,” says Jeff. “We have a very cross-functional environment.”

To get your team onboard, Jeff suggests showing them results early on—whether they’re positive or negative—so they understand the power A/B testing can have.

“Engineers like math. You will get buy-in if you have actual results to show them versus anecdotal evidence. Make sure you can show them the statistical significance,” says Jeff. Marketers will appreciate a similar approach. “Giving marketing the ability to have more quantitative results is also really helpful because a lot of the time their work is more qualitative.”
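For a conversion metric such as push notification opt-in, one standard way to check statistical significance is a two-proportion z-test. The source doesn’t say which test Houseparty or Taplytics uses, so treat this as an illustration; the counts in the usage line are made up.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of control (a) and variant (b).

    Returns the z statistic and the two-sided p-value under the normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # everyone or no one converted, so there is no difference to test
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Made-up counts: 4,000 of 10,000 control users opted in vs. 4,360 of 10,000 in the variant.
z, p = two_proportion_z_test(4000, 10000, 4360, 10000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

A small p-value says the observed gap is unlikely under “no difference”; it doesn’t by itself say the gap is large enough to matter.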

2. Have a plan for consistent data analysis.

Houseparty analyzes their test results based on weekly cohorts. “A user who enters your experiment on Saturday might have very different behaviors than a user who enters it on Wednesday,” says Jeff. “So we always analyze results in groups of seven days so that we’re always looking at a consistent group of people, and we know that everybody in that group could have been in the app for at least the same amount of time.”

This method keeps results from being skewed toward the most active app users. “It takes a little bit longer to get statistical significance, but you have consistent, healthier results,” says Jeff.
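Jeff’s weekly-cohort approach could be sketched roughly as follows. Assumptions: each user record carries an enrollment date, a variant, and a converted flag; cohorts are seven-day blocks counted from the experiment start; and a cohort is only reported once its most recent enrollee has had a full observation window. The function name and record layout are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta

def weekly_cohort_rates(users, start: date, today: date, window_days: int = 7):
    """Conversion rate per (weekly cohort, variant), counting only cohorts whose
    most recent enrollee has had at least window_days in the experiment.

    users: iterable of (enroll_date, variant, converted) tuples, where converted
    means the user performed the target action within window_days of enrolling.
    """
    counts = defaultdict(lambda: [0, 0])  # (cohort, variant) -> [conversions, users]
    for enroll_date, variant, converted in users:
        cohort = (enroll_date - start).days // 7  # 0 for days 0-6, 1 for days 7-13, ...
        last_enroll_day = start + timedelta(days=7 * cohort + 6)
        # Skip incomplete cohorts: their latest enrollees haven't had a full window yet.
        if last_enroll_day + timedelta(days=window_days) > today:
            continue
        counts[(cohort, variant)][0] += int(converted)
        counts[(cohort, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in counts.items()}

users = [
    (date(2020, 3, 2), "control", True),
    (date(2020, 3, 8), "control", False),
    (date(2020, 3, 3), "variant", True),
    (date(2020, 3, 10), "control", True),
    (date(2020, 3, 17), "control", True),  # third-week cohort is still incomplete on Mar 23
]
print(weekly_cohort_rates(users, start=date(2020, 3, 2), today=date(2020, 3, 23)))
```

Comparing variants within the same cohort, rather than pooling everyone, means every user in a comparison had the same chance to be in the app for the full window.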

Jeff says it’s also important to be aware of the direct and indirect impact your tests have on metrics across your app—not just the ones you expect to be influenced. “Sometimes we’ll do an experiment, and it hurts one metric but improves three others,” he says. Their solution is to find a variation with the best overall net improvement. “We’ll continue to iterate until we find one that doesn’t impact any metrics too negatively. But just knowing if there’s an impact is helpful.”
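The “best overall net improvement” rule can be sketched as a guardrail plus a score: discard any variation that hurts a single metric too much, then pick the one with the largest total lift. The unweighted sum, the 2% guardrail, and the metric names are hypothetical choices for illustration; a weighted sum would slot in the same way.

```python
def pick_variant(lifts: dict, max_drop: float = -0.02):
    """Pick the variant with the best net improvement across all tracked metrics.

    lifts maps variant -> {metric: relative change vs. control}, e.g. 0.03 for +3%.
    Variants that hurt any single metric by more than max_drop are rejected.
    Returns None if no variant passes the guardrail.
    """
    best, best_score = None, float("-inf")
    for variant, metric_lifts in lifts.items():
        if min(metric_lifts.values()) < max_drop:
            continue  # hurts at least one metric too much
        score = sum(metric_lifts.values())  # unweighted net improvement
        if score > best_score:
            best, best_score = variant, score
    return best

# Made-up results: B has the larger total lift but fails the guardrail on retention.
lifts = {
    "A": {"opt_in": 0.04, "friend_requests": 0.02, "d7_retention": -0.01},
    "B": {"opt_in": 0.08, "friend_requests": 0.06, "d7_retention": -0.05},
}
print(pick_variant(lifts))
```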

3. Be data-informed, not data-driven.

Jeff recommends using test results to inform your mobile development decisions—but not completely drive them. “If you’re completely data-driven, you’re going to end up like a robot with spammy stuff that kind of works but isn’t great for users,” says Jeff. “You want to keep the human touch in your decision-making process. So, love the data—but don’t let it absolutely dictate every single thing you do.”

While the team tries to ship only experiments that have positive results, what Jeff finds more valuable is data that shows them which changes they should avoid. “We’re testing to make sure changes aren’t majorly detrimental, and so that we understand what’s happening overall. It’s not always about necessarily getting the ‘best’ results every time. Sometimes it’s what you don’t ship that’s important.”

4. Don’t forget about user data privacy.

Maintaining trust by properly handling user data is important. That’s why Houseparty doesn’t collect any video of users in-app or their conversations. Instead, they analyze anonymized in-app events to find trends.

“I don’t care what our users are talking about,” says Jeff. “I care about how many times they’ve talked to a friend before or how many friends they have. The engagement interaction data is what’s valuable.”
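One common way to analyze engagement without attaching events to a directly identifiable user is to replace the user ID with a keyed hash before events are logged. The source doesn’t describe Houseparty’s pipeline, so this is only an illustration: the key, event name, and property names are hypothetical, and keyed hashing pseudonymizes rather than fully anonymizes the data.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets store, never source code.
SECRET_KEY = b"example-secret-key"

def make_event(user_id: str, name: str, properties: dict) -> dict:
    """Build an analytics event that carries a keyed pseudonym instead of the raw user ID."""
    pseudonym = hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"user": pseudonym, "event": name, "properties": properties}

# Only interaction counts are recorded; no audio, video, or message content.
event = make_event("user-123", "call_started", {"friend_count": 12, "prior_calls_with_friend": 3})
print(event)
```

The same user always maps to the same pseudonym, so per-user engagement counts still work, while anyone reading the event log without the key can’t recover the original ID.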