Streamline users’ digital experience in banking, fintech and insurance companies

Maintaining customer loyalty is an ongoing challenge for banks and fintech companies, especially at key points in the relationship such as account creation and onboarding. Today’s tech-savvy consumers expect a fast, streamlined digital experience throughout the cross-platform customer journey, so every interaction matters.

Add to this the simple fact that consumers are especially protective of their money and their personally identifiable information (PII), and being able to refine the online banking experience at these touch points becomes crucial to your success as a business. That’s where A/B testing, also known as split testing, can help. But understanding how to apply A/B testing best practices to create a winning experience for your online banking or fintech company isn’t easy.

Any perceived issue with your company’s digital experience will hurt your relationships with customers. In fact, 33% of Americans would consider switching services due to a poor customer experience. The stakes are high, so each experiment you run has to provide value to consumers as well as your business.

Run A/B Tests to Keep Up with Customer Expectations

To keep pace with changing customer expectations in the fintech space, companies need to stay current with A/B testing best practices so they can meet their customers’ demands.

Consider the following scenario:

A potential borrower starts an application for a home loan on your website. As they go through the process of learning more about their options, they land on a page that displays multiple loan options and interest rates. The borrower has no idea what the different loan options are, or why some rates are lower or higher than others. Looking for help yields no results because there’s no real educational material on that page to help clarify their options. As a result, this potential borrower feels overwhelmed and closes the browser tab, leaving the application unfinished.

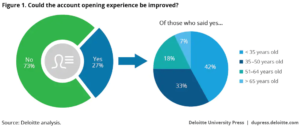

This isn’t all that surprising. According to a 2017 Deloitte study, “Conventional wisdom suggests that the account opening process at many banks seems to have become burdensome, due to heightened regulatory demands and compliance and control pressures.”

As you can see, the large majority of consumers who want a better online banking experience are younger than 35, the growing audience that modern banking and fintech brands need to target.

Deloitte’s research shows that even small tweaks that help customers move through complex account processes faster have a positive impact on overall customer satisfaction. A/B testing enables marketers to catch and correct these issues before they reduce engagement among potential borrowers. With cross-channel experiments, savvy organizations can proactively test adding more educational content to the page, whether by linking to information directly, offering modal pop-ups that share helpful context, or including a “Contact Us” option directly on the page.

A/B Testing Best Practices for Banks and Fintech Brands

Conducting A/B tests on your website, landing pages, offers, and online applications helps refine the online banking experience for your customers and creates more value for your business. Here’s a rundown of our top A/B testing best practices to help ensure you’re creating experiments that focus on the things that matter.

Use Customer Feedback to Identify Areas of Opportunity

The first step toward creating an A/B test is understanding what needs to change with the current experience. You don’t want to risk making changes to something your customers don’t care about. Remember, banking and finance consumers are more risk-averse due to their higher data security demands.

Online abandonment is one of the biggest challenges facing banks and financial institutions. According to SalesCycle, “Finance abandonment rates were an average of 75.7% for Q3 2018.” If you’re experiencing a drop-off in customer engagement from specific pages on your site, it’s important to understand why. You do that through customer feedback.
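Before asking customers why they leave, it helps to pin down where they leave. Below is a minimal Python sketch, assuming you can export how many visitors reached each step of a loan-application funnel; the step names and counts are hypothetical. Drop-off for a step is the share of visitors who reached it but never reached the next one.

```python
# Hypothetical step names and visitor counts, for illustration only.
funnel = [
    ("Loan options page", 2000),
    ("Application start", 900),
    ("Personal details", 600),
    ("Application submitted", 450),
]

def step_dropoff(steps):
    """Return (from_step, to_step, drop-off rate) for each consecutive pair of steps."""
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        rate = 1 - count_b / count_a if count_a else 0.0
        results.append((name_a, name_b, rate))
    return results

def overall_abandonment(steps):
    """Share of visitors who entered the first step but never reached the last one."""
    first, last = steps[0][1], steps[-1][1]
    return 1 - last / first

for a, b, rate in step_dropoff(funnel):
    print(f"{a} -> {b}: {rate:.1%} dropped off")
print(f"Overall abandonment: {overall_abandonment(funnel):.1%}")
```

The step with the steepest drop-off is usually the best place to start collecting feedback.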

There are several methods to get feedback from your customers, such as:

- Adding an exit survey on loan applications or landing-page offers, asking customers why they did not complete it

- Adding an exit survey on your thank-you pages, asking visitors what they liked and did not like about your onboarding or application process
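Once responses come in, even a simple tally shows which complaints dominate. This sketch assumes the exit survey offers fixed answer choices; the responses below are made up for illustration.

```python
from collections import Counter

# Hypothetical answers collected from an exit survey on a loan application.
responses = [
    "Application too long",
    "Didn't understand the loan options",
    "Concerned about data security",
    "Didn't understand the loan options",
    "Application too long",
    "Didn't understand the loan options",
]

def top_reasons(answers, n=3):
    """Count each non-empty answer and return the n most common with their share of the total."""
    counts = Counter(answer.strip() for answer in answers if answer.strip())
    total = sum(counts.values())
    return [(reason, count, count / total) for reason, count in counts.most_common(n)]

for reason, count, share in top_reasons(responses):
    print(f"{reason}: {count} ({share:.0%})")
```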

Feedback gives you insight into what areas of your onboarding processes need improvement. Maybe applications are too long or confusing. Maybe there isn’t enough information or reassurance to make visitors feel secure. The only way to know is to ask.

Set Up Your Control and Test Cases

In A/B testing, you need a control and a test case. Otherwise, it’s impossible to know whether your changes had the intended impact on the customer experience. The control is your current website without any changes. The test case contains the change you want to test. An example might be testing if an informational pop-up on your home-loan landing page would help convince customers to take the next step and fill out a loan application.

In this case:

- Variation A is the control case, i.e., the landing page in its current form.

- Variation B is the test case (or treatment), i.e., the landing page including a pop-up.

When setting up the control and the test case, test only one element at a time. Multivariate testing can potentially save your team time in the long run, but it makes analysis of the results more difficult. Changing too many aspects of the online banking experience can also negatively impact trust in your brand by highlighting inconsistencies for individual customers.
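One way to enforce the one-element rule is to describe each variant as a configuration and check the difference before launch. The sketch below is a hypothetical example: the configuration keys are illustrative and are not tied to Taplytics or any other tool’s API.

```python
# Hypothetical page configurations; the field names are illustrative, not a real schema.
control = {
    "headline": "Find the right home loan",
    "cta_text": "Start your application",
    "show_info_popup": False,
    "rate_table_layout": "grid",
}

# The test case copies the control and changes exactly one element.
treatment = dict(control, show_info_popup=True)

def changed_elements(a, b):
    """Return the sorted keys whose values differ between two variant configs."""
    keys = set(a) | set(b)
    return sorted(k for k in keys if a.get(k) != b.get(k))

def check_single_change(a, b):
    """Raise if the treatment changes anything other than exactly one element."""
    diff = changed_elements(a, b)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one changed element, found {diff}")
    return diff[0]

print(check_single_change(control, treatment))  # show_info_popup
```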

Test Variables Across Platforms

The modern online banking experience spans multiple touch points across mobile and desktop devices. Using A/B testing to refine that cross-platform experience requires a clear and comprehensive understanding of how potential customers interact with your company throughout their journey.

Let’s say you’re testing new language for the call-to-action (CTA) on your home-loan application page. Because this page is accessible on both mobile and desktop, it’s important to have similar test subjects in both groups. This doesn’t mean the same users need to access both your mobile app and your desktop website; it means that those who encounter the A/B test variants share common characteristics or behaviors. With customer intelligence, you’ll be able to define the specific cohorts you want to include in your experiment.

When potential customers move between their devices midway through the sign-up process, they’ll expect to see the same kind of information presented on both.

Keeping these variables the same also makes it easier to understand the results of your A/B test.
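A common way to keep a customer in the same variant when they switch devices is to assign variants by hashing a stable customer identifier, such as a logged-in account ID, instead of a device or cookie ID. The Python sketch below assumes such an ID exists; the function name, experiment name, and customer ID are hypothetical, and this is a generic technique rather than how any particular tool implements assignment.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically bucket a user into "A" (control) or "B" (test).

    Hashing the experiment name together with a stable, logged-in user ID
    (rather than a device or cookie ID) means the same customer lands in the
    same variant on mobile and on desktop.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 6 bytes (48 bits) of the hash as a fraction in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "A" if bucket < split else "B"

# The same customer gets the same variant no matter which device they use.
mobile = assign_variant("customer-1042", "home-loan-cta")
desktop = assign_variant("customer-1042", "home-loan-cta")
print(mobile == desktop)  # True
```

Including the experiment name in the hash keeps assignments independent across experiments, so a customer in the test group of one experiment isn’t automatically in the test group of every other one.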

Set a Repeatable A/B Testing Cadence

Gaining valuable insights from your experiments is only possible when your team has a well-defined process for gathering data from A/B tests. If you run your control version one week and the test version the next, you won’t know whether the results you’re seeing are due to the test variable or to the timing of the test, which could throw your results completely off.

If you’re a home- and auto-loan provider testing the written copy in your mobile app, outside variables could affect the results if you run the variants at separate times. Say you ran variant A one week and variant B the week after, but interest rates rose by 0.3% in week two. That increase would directly affect potential customers’ willingness to sign up for a loan and would skew your results accordingly. Running the variants concurrently mitigates the risk of those kinds of discrepancies.
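The timing problem is easy to see with some hypothetical numbers. The sketch below assumes a baseline conversion rate of 10% in week one that falls to 7% in week two after the rate increase, and assumes the new copy has no real effect at all.

```python
# Hypothetical weekly baseline conversion rates: interest rates rise before
# week 2, so fewer visitors apply no matter which copy they see.
WEEKLY_RATE = {1: 0.10, 2: 0.07}
VISITORS_PER_WEEK = 1000

def conversions(week, visitors, lift=0.0):
    """Expected conversions for a group of visitors in a given week.

    `lift` is the variant's true relative effect; 0.0 means the copy does nothing.
    """
    return WEEKLY_RATE[week] * (1 + lift) * visitors

def sequential(lift_b=0.0):
    """Variant A gets all of week 1's traffic, variant B all of week 2's."""
    rate_a = conversions(1, VISITORS_PER_WEEK) / VISITORS_PER_WEEK
    rate_b = conversions(2, VISITORS_PER_WEEK, lift_b) / VISITORS_PER_WEEK
    return rate_a, rate_b

def concurrent(lift_b=0.0):
    """Both variants run in both weeks, each receiving half of the traffic."""
    half = VISITORS_PER_WEEK / 2
    total = 2 * half  # visitors per variant over the two weeks
    conv_a = conversions(1, half) + conversions(2, half)
    conv_b = conversions(1, half, lift_b) + conversions(2, half, lift_b)
    return conv_a / total, conv_b / total

print("Sequential:", [f"{r:.1%}" for r in sequential()])
print("Concurrent:", [f"{r:.1%}" for r in concurrent()])
```

Run sequentially, variant B appears to convert 30% worse even though the copy did nothing; run concurrently, both variants absorb the same rate shock and come out equal.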

While there’s no hard-and-fast rule for how long to run an A/B test, it needs to run long enough to collect a large sample. How long is enough? That depends on how much traffic usually lands on your website or mobile app. As a general rule of thumb, we recommend running your A/B test for approximately two weeks. Don’t let early results sway you, and don’t alter the test midway through.
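To turn “long enough” into a number, you can estimate the sample size each variant needs and divide by your daily traffic. This is a sketch using the standard normal-approximation formula for comparing two conversion rates at 5% significance and 80% power; the baseline rate, target rate, and daily traffic are hypothetical inputs you would replace with your own.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(p_control, p_test, alpha=0.05, power=0.8):
    """Visitors needed in EACH variant to detect a change from p_control to p_test
    with a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_test) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_test * (1 - p_test))
    ) ** 2
    return math.ceil(numerator / (p_test - p_control) ** 2)

def days_needed(n_per_variant, daily_visitors, variants=2):
    """Days of traffic needed if visitors are split evenly across variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)

# Hypothetical inputs: 5% baseline conversion, hoping to detect a lift to 6%,
# with about 1,200 eligible visitors per day across both variants.
n = sample_size_per_variant(0.05, 0.06)
print(n, "visitors per variant,", days_needed(n, 1200), "days")
```

With these inputs the estimate comes to about 8,160 visitors per variant, which at 1,200 visitors a day works out to 14 days. Smaller expected lifts or lower traffic push the duration up quickly, because the required sample grows with the inverse square of the difference you want to detect.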

Interpret the Results of Your A/B Tests

Every A/B test you create will generate lots of data about how customers interact with your product or service. Getting real-world business value from that data is possible only when you can trust the results.

That’s why it’s important to calculate statistical significance, which tells you whether the results of your test were due to the variant rather than another factor. Statistical significance measures how unlikely the observed difference would be if your change had no real effect, which helps you separate the impact of your intentional changes from random variation.

This is also why we recommend running tests for about two weeks: the longer you collect data, the larger your sample and the more reliable the insights you gain.

Here’s how to calculate statistical significance using an example:

- Variant A/control is an existing landing page without an information pop-up. During testing, 1,000 people visited Variant A, and the page converted 50 visitors, for a 5% conversion rate.

- Variant B/test is the same landing page, with a pop-up offering more context about your product. During testing, 1,000 people visited Variant B, and the page converted 130 people, for a conversion rate of 13%.

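(13% − 5%) ÷ 5% = 160%

Based on these numbers, Variant B/test converted 160% more visitors than Variant A/control. Note that 160% is the relative lift, not the statistical significance. Significance asks whether a difference this large could plausibly be due to chance; with 1,000 visitors per variant, a standard two-proportion z-test shows that this is extremely unlikely, so Variant B is the winner. The good news is, Taplytics will calculate the statistical significance of your A/B tests for you, so you don’t need to do it yourself.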
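For readers who want to check the math, here’s a minimal sketch of a two-sided two-proportion z-test, one standard way to test whether two conversion rates differ, applied to the example numbers above (1,000 visitors and 50 conversions for Variant A; 1,000 visitors and 130 conversions for Variant B). It uses only the Python standard library and is not necessarily the method any particular testing tool uses.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (relative_lift, z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate assumes no difference between variants (the null hypothesis).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution, via erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (p_b - p_a) / p_a
    return lift, z, p_value

lift, z, p = two_proportion_z_test(50, 1000, 130, 1000)
print(f"lift = {lift:.0%}, z = {z:.2f}, p = {p:.2g}")
```

For this example the relative lift is 160%, z is about 6.25, and the p-value is far below the conventional 0.05 threshold, so the difference is very unlikely to be chance. A p-value above 0.05 would mean the test hasn’t yet shown a reliable difference, however large the lift looks.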

Pick the Right A/B Testing Tool for Your Needs

If you’re a financial marketer looking to improve your conversion rates, the right testing tools can make all the difference. We believe your time is better spent building the best hypotheses, not spending hours trying to learn a new technology.

When it comes to choosing an A/B testing tool, it’s important to consider a range of factors, including the experience and reliability of the provider, along with usability, security, and features. For example, is the product easy to install, and is the interface easy for nontechies to use? How many customers or experiments can be supported at the same time? Does it provide real-time tracking and flexible reporting?

For banks and financial companies looking for a complete A/B testing solution, Taplytics can help. We offer a comprehensive suite of tools that allows you to run A/B tests for your website, landing pages, applications, forms, email campaigns, and more, all from a single platform and all without writing a line of code.