How to Find Your Own A/B Testing Best Practices

The most important thing you can do when launching A/B tests is to be judicious about which tests you run. Use a framework (like the one below) to prioritize your tests, so you select the ones with the highest impact on your quarterly KPIs and build your own A/B testing best practices along the way. Begin by creating a test idea backlog.

It All Begins With the Backlog

Your team should always be building a backlog of ideas for tests you want to run, perhaps in partnership with your agency or testing platform vendor. Create a shared document and nominate someone as its owner. (Or curator. Or czar. Or champion. Your call.)

Whenever an idea for a test comes up, add it to the backlog, and meet periodically to rank the ideas by priority and potential. Each time you rank them, delete the bottom 20 percent. If you aren’t constantly pruning your backlog, it can grow overwhelming and make running tests seem more daunting than it actually is.
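
The ranking-and-pruning routine is simple enough to sketch in a few lines of Python. This is a minimal illustration, not a prescribed tool: it assumes each idea receives a numeric priority score at the ranking meeting, and the `Idea` class, the scores, and `prune_backlog` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """A backlog entry with a hypothetical priority score from the last ranking meeting."""
    name: str
    score: int  # higher = more promising; the scoring scale is an assumption


def prune_backlog(ideas: list[Idea], drop_percent: int = 20) -> list[Idea]:
    """Rank ideas best-first and drop the bottom `drop_percent` percent of them."""
    ranked = sorted(ideas, key=lambda idea: idea.score, reverse=True)
    # Integer arithmetic rounds down, so a very small backlog loses nothing.
    n_drop = len(ranked) * drop_percent // 100
    return ranked[: len(ranked) - n_drop]


backlog = [
    Idea("New checkout button color", 3),
    Idea("Free-shipping banner", 8),
    Idea("Shorter signup form", 6),
    Idea("Reorder footer links", 1),
    Idea("Personalized recommendations", 9),
]

for idea in prune_backlog(backlog):
    print(idea.score, idea.name)
```

With five ideas, 20 percent works out to one idea, so "Reorder footer links" is dropped at this meeting.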

Examples of A/B tests to consider:

- eCommerce or retail: Test featured items, promotions, button placement, offers

- Foodservice: Test push notifications, text notifications, checkout design, rewards

- Media: Test show length, ads placement, show controls, favorites lists

- Finance: Test message copy, put offers above or below the fold, test emails

- Education: Test recommended products, messaging

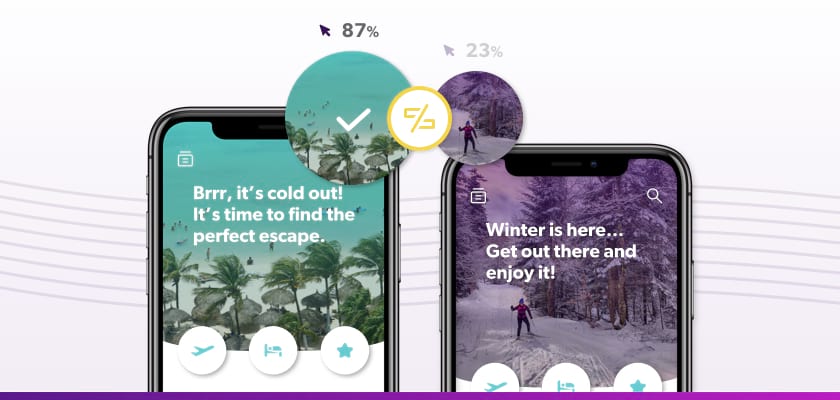

- Travel: Test images, offers, creative, push notifications

- Gaming: Test on-boarding, in-app messages, push notifications

If you’re not sure where to begin, run a technical test to confirm that your site or app works on all devices. Your product or engineering team may have built it for the top devices and browsers, but does it work on all of them? Fixing technical bugs can be an easy way to lift conversions and avoid confusion later on.

Not every test has the same potential, so treat them accordingly. For instance, testing a feature change low in the funnel, where there’s very little traffic, may have less impact than changing one higher in the funnel, and may not be the best use of your time.
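
A quick back-of-the-envelope calculation shows why traffic matters. The sketch below assumes a test's payoff scales with the number of visitors who reach that stage; the function name, visitor counts, conversion rates, and the 10 percent lift are all hypothetical.

```python
def extra_conversions(visitors: int, baseline_rate: float, relative_lift: float) -> float:
    """Rough monthly gain if a winning variant's relative lift holds for all traffic at a stage."""
    return visitors * baseline_rate * relative_lift


# Hypothetical stages: lots of traffic high in the funnel, little traffic low in it.
high_in_funnel = extra_conversions(visitors=200_000, baseline_rate=0.05, relative_lift=0.10)
low_in_funnel = extra_conversions(visitors=5_000, baseline_rate=0.20, relative_lift=0.10)

print(f"High in funnel: ~{high_in_funnel:.0f} extra conversions/month")
print(f"Low in funnel:  ~{low_in_funnel:.0f} extra conversions/month")
```

Even though the low-funnel stage in this example converts at four times the rate, the same 10 percent lift yields about a tenth as many extra conversions.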

Each test also requires a different level of support. Renaming a form field takes a lot less work than redesigning your checkout page. To prioritize your tests, write all of this information down for each one so you can rank them.

Write Each Test On a Sticky Note

Write each of your test ideas on a sticky note (real ones are great, but virtual ones work too) with four pieces of information: A description of the experiment, funnel stage, user flow, and goal.
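
If your team keeps the backlog in a spreadsheet or tracker instead of on paper, each sticky note maps naturally to a small record with those four fields. A minimal Python sketch; the `StickyNote` name and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StickyNote:
    """One test idea, holding the four pieces of information each note needs."""
    description: str   # what the experiment changes
    funnel_stage: str  # e.g. "Retention" or "Revenue"
    user_flow: str     # the path through the site or app that the test touches
    goal: str          # the metric or KPI the test should move


note = StickyNote(
    description="Move the promo banner above the fold",
    funnel_stage="Revenue",
    user_flow="Home page -> product page -> checkout",
    goal="Increase checkout conversion rate",
)
print(note)
```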

Stick all your team’s notes to a whiteboard divided into four quadrants where the x-axis is effort and the y-axis is impact. This isn’t a science: Relative decisions are fine, and you can always move them. Sometimes it helps to just place the first one, then decide where others fit in relation to it.

Once all your tests are on the whiteboard, you can see which to run first. Start with those in the top left quadrant (labeled “Quick wins”), which are no-brainers: They can have a large impact on your KPIs and don’t take much effort. Notes in the top right quadrant are next. They’re high-impact but also high-effort, so brainstorm ways to break them down into quick wins that let you validate the idea before investing more resources.

Notes that fall into the bottom two quadrants are probably not worth your team’s time, at least for now, though that can change. If your company’s priorities shift, rearrange the board.

Snap a photo, then reorder the test ideas in your A/B testing backlog to match the board, from top left (highest priority) to bottom right (lowest).
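
The board logic can also be expressed in code, which helps if your backlog lives in a spreadsheet. This sketch assumes impact and effort are scored relative to each other on a 1 to 10 scale with 5 as the dividing line. The labels for the three quadrants other than “Quick wins,” the choice to rank bottom left above bottom right, and all names and scores are hypothetical.

```python
from dataclasses import dataclass

MIDPOINT = 5  # hypothetical dividing line between "low" and "high" on both axes

# Highest to lowest priority: top left, top right, bottom left, bottom right.
QUADRANT_ORDER = [
    "Quick wins",                # high impact, low effort
    "High impact, high effort",
    "Low impact, low effort",
    "Low impact, high effort",
]


@dataclass
class Note:
    name: str
    impact: int  # y-axis, relative score from 1 (low) to 10 (high)
    effort: int  # x-axis, relative score from 1 (low) to 10 (high)


def quadrant(note: Note) -> str:
    """Return the board quadrant a note falls into."""
    high_impact = note.impact > MIDPOINT
    high_effort = note.effort > MIDPOINT
    if high_impact:
        return QUADRANT_ORDER[1] if high_effort else QUADRANT_ORDER[0]
    return QUADRANT_ORDER[3] if high_effort else QUADRANT_ORDER[2]


def order_backlog(notes: list[Note]) -> list[Note]:
    """Sort by quadrant, then by higher impact and lower effort within each quadrant."""
    return sorted(notes, key=lambda n: (QUADRANT_ORDER.index(quadrant(n)), -n.impact, n.effort))


board = [
    Note("Redesign checkout page", impact=9, effort=9),
    Note("Change footer font", impact=2, effort=1),
    Note("Rename a form field", impact=7, effort=2),
    Note("Rebuild site search", impact=3, effort=8),
]

for n in order_backlog(board):
    print(f"{quadrant(n)}: {n.name}")
```

Because scores are relative, moving a note on the board is just a matter of changing its two numbers and re-sorting.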

Set Up Your Test

Now you’re ready. The sticky note for your first test tells you most of what you need to know: Your experiment idea, the stage of the funnel, the user flow, and the goal. Implement the test in your A/B testing platform, ideally without involving too many of your team’s resources.

A tool with an intuitive interface and a Visual Editor can make all the difference between product and marketing teams running their own tests and waiting for an engineer to code them.

Best Practices for Setting Up A/B Tests

Segment your audience: Segmentation means dividing your audience by a characteristic such as geographic location or purchase date. Not all users react the same way, and segmentation lets you get specific about who you’re testing and learning about.

Most companies start by segmenting their audience based on a positive or negative behavior, such as a purchase or an unsubscribe. As a team gets better at testing, its segments typically grow narrower, and it can save unusually high- or low-performing cohorts to track over time.
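
In code, a segment is just a filter over user records. A minimal sketch, assuming each record carries a location and a couple of behavioral flags; the `User` fields, the `segment` helper, and the sample data are hypothetical, and a testing platform would normally define segments through its own interface.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    country: str
    purchased: bool     # positive behavior
    unsubscribed: bool  # negative behavior


def segment(users: list[User], rule: Callable[[User], bool]) -> list[str]:
    """Return the IDs of users who match a segmentation rule."""
    return [u.user_id for u in users if rule(u)]


users = [
    User("u1", "US", purchased=True, unsubscribed=False),
    User("u2", "CA", purchased=True, unsubscribed=False),
    User("u3", "US", purchased=False, unsubscribed=True),
    User("u4", "CA", purchased=False, unsubscribed=False),
]

buyers = segment(users, lambda u: u.purchased)          # broad behavioral segment
unsubscribers = segment(users, lambda u: u.unsubscribed)  # negative behavior
buyers_in_ca = segment(users, lambda u: u.purchased and u.country == "CA")  # narrower segment
print(buyers, unsubscribers, buyers_in_ca)
```

Narrowing a segment is simply a matter of adding conditions to the rule, as the last example does by combining behavior with location.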

Avoid overlapping tests: Don’t run multiple tests on the same sample of the same audience at the same time. It seems obvious, but running too many tests on one group at once means one test can skew another’s results. (Your A/B testing platform should have safeguards to prevent this.)
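
One common way to keep concurrent tests from overlapping is mutually exclusive assignment: each user is hashed into exactly one experiment, so no user sees two tests at once. The sketch below assumes that approach; the experiment names, salts, and functions are hypothetical and do not describe any particular platform’s implementation.

```python
import hashlib

# Hypothetical tests that would otherwise run on the same audience at the same time.
EXPERIMENTS = ["checkout_button", "promo_banner", "signup_form"]


def bucket(user_id: str, salt: str, n_buckets: int) -> int:
    """Deterministically map a user to one of n_buckets slots with a salted SHA-256 hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % n_buckets


def assign(user_id: str) -> tuple[str, str]:
    """Place each user in exactly one experiment, then in control or variant within it."""
    experiment = EXPERIMENTS[bucket(user_id, "exclusion-layer", len(EXPERIMENTS))]
    # A different salt makes the control/variant split effectively independent of the experiment split.
    arm = "variant" if bucket(user_id, experiment, 2) == 1 else "control"
    return experiment, arm


print(assign("user-42"))
print(assign("user-42"))  # same user, same assignment every time
```

The trade-off is that each test receives only a share of the traffic, so each one takes longer to collect enough data.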

Aim for statistical significance: To learn from your tests, you need confidence that a difference you measured on a sample of your audience reflects a real effect rather than random chance, so it will hold for the whole audience. Your A/B testing tool should calculate this for you. Make sure each test meets the proper threshold before you share the results.
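
To see what such a calculation involves, here is a sketch of one standard method, the two-proportion z-test, for comparing conversion rates between a control and a variant. It assumes a simple fixed-sample test judged against a 0.05 threshold; the function name and the conversion counts are hypothetical, and some platforms use other approaches, such as sequential or Bayesian methods.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing conversion rates of control (a) and variant (b).

    Assumes at least one conversion and one non-conversion overall, so the standard error is nonzero.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate of the shared rate if there is truly no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 4,000 control users converted vs. 250 of 4,000 variant users.
z, p = two_proportion_z_test(200, 4000, 250, 4000)
print(f"z = {z:.2f}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

With these numbers, z is about 2.43 and p is roughly 0.015, below the conventional 0.05 threshold. Treat this as an illustration of the idea, not a replacement for your tool’s calculation.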

The Zappos Testing Case Study

The eCommerce leader Zappos has identified experimentation as a key driver of its growth and, as such, has committed to a culture of experimentation. The team there wants to push the boundaries of what’s possible with testing to drive the greatest impact on its key business goals, like top-line revenue.

Taplytics supports Zappos’ experimentation cycle in four ways: It arranges quarterly meetings (with bi-weekly check-ins), manages the backlog, conducts post-mortem reporting and analysis, and offers iterative suggestions to improve tests.

The Zappos team is focused on the Retention and Revenue stages of the testing funnel. For each test idea they generate, they take an iterative approach that flows from validation (try a quick win) to elaboration (try a deeper experiment with more resources) to iteration (apply those lessons to other areas of the app) to building (build or rebuild features and products based on what they learned).

All this allows the two teams to build an experimentation services project plan in which they know which tests they’ll run a full quarter in advance. As far as A/B testing best practices go, Zappos’ model is pretty hard to beat.