Save Time By Creating a Repeatable A/B Testing Process

To build A/B testing best practices for your team, you have to turn your test results into repeatable knowledge. That means deciding whether to keep, kill, or scale each test idea, and applying what you learned to the next round of tests. Cumulatively, those lessons should guide your product development. The strongest features are discovered through experimentation, and that process starts from the bottom up.

Why do we do A/B testing?

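Product teams A/B test to prove causality. That is, they try to show that changing something has a reliable effect. As one product leader without an A/B testing platform put it, “We would make changes and see some metrics go up and some go down,” but they wouldn’t know what caused what. Because an A/B test shows the change to a random subset of users while a control group sees the original at the same time, a statistically significant difference between the groups can be attributed to the change rather than to seasonality or other releases.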
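One common way to check whether an effect is "reliable" is a two-proportion z-test on conversion counts. The sketch below is a minimal illustration using only Python's standard library. The function name and the example counts are hypothetical, not figures from any test described in this article, and your testing platform may use a different statistical method.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and users in the control group (A).
    conv_b / n_b: conversions and users in the variant group (B).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each group needs at least one user")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled conversion rate under the null hypothesis of "no difference".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Nobody (or everybody) converted in both groups: no evidence of an effect.
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical counts: 200 of 4,000 control users convert vs. 260 of 4,000 variant users.
z, p = two_proportion_z_test(200, 4000, 260, 4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (teams often use a threshold such as 0.05, chosen before the test starts) suggests the difference is unlikely to be noise. It does not say how large or how valuable the effect is, so it is worth reading alongside the raw conversion rates.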

Use best practices to guide your product roadmap

The cumulative result of all your team’s A/B testing should be a superior product: one that’s more intuitive, that users enjoy, and that increases sign-ups and purchases (or whatever your quarterly KPI is).

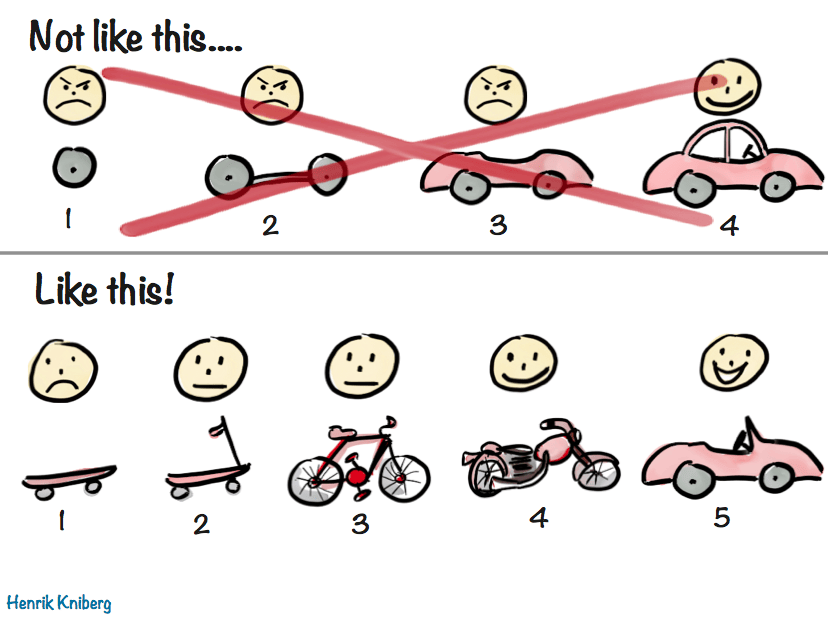

Teams don’t arrive at perfection with one big blockbuster test, but rather through an iterative testing process. That means starting small, with tests that take little to no design resources, and building up to bigger and bigger tests until eventually, you’re altering features and the overall direction of the product.

Iteration de-risks the testing process and keeps users happy along the way. With blockbuster tests, like building a brand new app from scratch, you take a big gamble. Users either love it or hate it, and if they don’t adopt it, it’s hard to learn which parts they do and don’t like.

[Image credit Henrik Kniberg]

Think of each test as the act of gathering bits of user truth. Maybe they like shorter checkouts, dislike cluttered pages, and prefer subdued, neutral tones. Over time, a picture emerges of who those users are and it gives the team an intuition about what they like.

The more closely the app or site reflects their preferences, the more successful it’s likely to become. A/B test-driven product design is a bottom-up process, where the team running the tests tells leadership what users want and recommends what to build.

The worst thing that can happen to a product is for leadership to impose a top-down roadmap. A leadership team that gives unilateral feature direction based on their (often incomplete) view of users’ needs will lead the product astray, make users less happy, and encumber the team with technical debt.


Are there exceptions to the iterative testing rule?

Nope. Every feature benefits from iterative testing. If you do skip the iteration process, it should only be because you already have a deep body of evidence from past tests.

No feature is too big to be iterated. Take the team at a big retailer that wants to build a personalization engine for their eCommerce site. Rather than invest nine months of the engineering team’s time upfront, they can simply use their A/B testing platform to add a menu button that says “See your top picks.” If that earns clicks, they can build a minimum-viable personalization tool that simply filters top picks. Only if that tool earns high engagement rates, and the team has reason to believe there’s a return on building a full personalization engine, should they go ahead and build the whole thing.

Here’s how a product manager at a ride-hailing service describes launching an inexpensive iterative test to build a case for a larger feature:

“I’m excited to use A/B testing to start making suggestions to our riders. It’s cool because we don’t need a special algorithm or an entire machine learning team to get it launched. It’s using our search filter and A/B testing tool. Then, we’ll use the results of the tests to fine-tune the suggestions. The development of the suggestion feature is going to be reactionary based on real user data versus making it a big project.”

Quick wins and iterative tests build toward knowledge, which in turn builds toward features. And if teams are really smart, they’ll apply what they learn throughout those iterative tests to make other features of the product better.

Spread the knowledge

If you know something works well in one part of the product, it stands to reason that it should work for the same users in other areas. Plan additional iterative tests to apply what you’ve learned.

- If users use the chatbot on your homepage to process returns, let them place orders through it

- If your users respond to customer satisfaction surveys at an unusually high rate, see if they’ll answer product surveys

- If users respond well to colloquial, emoji-laden error messages, use that tone in their regular order updates

Every lesson your team learns and records is knowledge that can probably make some other part of the product better.

Conduct quarterly check-ins

The fast food brand Chick-fil-A conducts quarterly check-ins on A/B testing for its mobile app, which now has 11 million downloads. At the check-ins, the team, its agency, and experts from Taplytics ensure that its tests are aligned with the app’s quarterly KPIs.

Together, the teams:

- Discuss the product roadmap and any KPI changes

- Check on how the previous quarter’s tests are performing

- Discuss what everyone has learned (especially about why users do what they do)

- Add new ideas to the backlog

- Prioritize ideas for the upcoming quarter

The Chick-fil-A team leaves these quarterly check-ins with testing roadmaps to iteratively validate new feature ideas, such as a new native checkout feature or a new payment flow.

“With Taplytics’ visual A/B testing solution, we saw a huge bump in credit card purchases once we introduced our new payment flow,” said Jay Ramierez, Product Owner at Chick-fil-A. “The number of customer payment option complaints dropped to zero, meaning customers are much happier with their Chick-fil-A experience.”

Quarterly check-ins keep the company’s app on track and move it iteratively closer to perfection.

Chick-fil-A increased mobile payments 6% with testing: Read the story here.

Learn More with Taplytics

Taplytics makes A/B testing easy with our no-code visual editor, which lets your team run A/B tests without relying on engineering resources. Get started with a free trial.